Image credit: Springer

Abstract

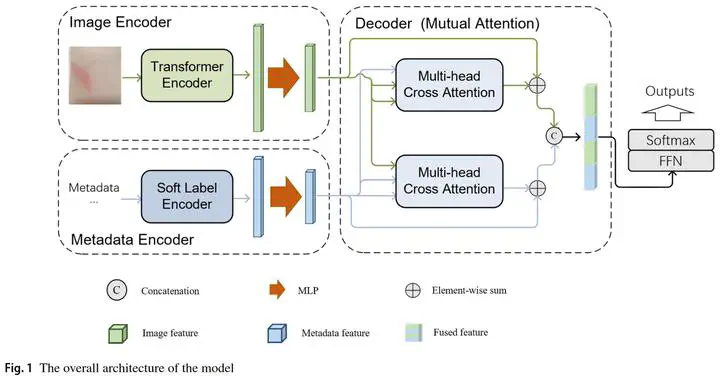

Skin diseases are becoming more prevalent, and diagnosing them remains a challenging clinical task. Deep learning could help address this challenge. In this study, we propose a novel neural network for skin disease classification. Because the datasets used in this research contain both skin disease images and clinical metadata, the network is a multimodal Transformer with two encoders, one for images and one for metadata, and one decoder that fuses the multimodal information. A suitable Vision Transformer (ViT) model serves as the backbone for extracting deep image features. The metadata are treated as labels, and a new Soft Label Encoder (SLE) is designed to embed them. In the decoder, a novel Mutual Attention (MA) block is proposed to better fuse image features and metadata features. To evaluate the model’s effectiveness, extensive experiments were conducted on a private skin disease dataset and the benchmark dataset ISIC 2018. Compared with state-of-the-art methods, the proposed model performs better, representing an advance in skin disease diagnosis.
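The abstract does not give the exact definitions of the SLE or the MA block, so the following is only a rough illustration of the general idea of fusing image tokens and metadata embeddings with attention in both directions. It is a minimal NumPy sketch under stated assumptions: the soft-label embedding (a probability vector multiplied by an embedding table), the absence of learned projections, the mean pooling, and all names and sizes (`mutual_attention_fuse`, `d_model`, 197 tokens) are hypothetical and not taken from the paper.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(queries, context):
    """Scaled dot-product attention: each query row attends over all context rows.

    Assumption: no learned query/key/value projections, to keep the sketch short.
    """
    d = queries.shape[-1]
    weights = softmax(queries @ context.T / np.sqrt(d))  # (n_queries, n_context)
    return weights @ context  # (n_queries, d)


def mutual_attention_fuse(img_tokens, meta_tokens):
    """Generic bidirectional cross-attention (a stand-in, not the paper's MA block)."""
    img_attended = cross_attention(img_tokens, meta_tokens)   # images look at metadata
    meta_attended = cross_attention(meta_tokens, img_tokens)  # metadata looks at images
    # Residual connection, then mean-pool each modality and concatenate.
    img_vec = (img_tokens + img_attended).mean(axis=0)
    meta_vec = (meta_tokens + meta_attended).mean(axis=0)
    return np.concatenate([img_vec, meta_vec])


rng = np.random.default_rng(0)
d_model = 16  # hypothetical feature width

# Stand-in for ViT output: 197 tokens of width d_model (random values here).
img_tokens = rng.normal(size=(197, d_model))

# Hypothetical soft labels: 2 metadata fields, each a distribution over 3 categories.
soft_labels = np.array([[0.9, 0.1, 0.0],
                        [0.0, 1.0, 0.0]])
embedding_table = rng.normal(size=(3, d_model))  # one embedding per category
meta_tokens = soft_labels @ embedding_table      # (2, d_model)

fused = mutual_attention_fuse(img_tokens, meta_tokens)
print(fused.shape)
```

In a trained model, the fused vector would feed a classification head, and the embedding table and attention projections would be learned. Here they are random or omitted, so the output values carry no diagnostic meaning.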